
Tests & Schedules

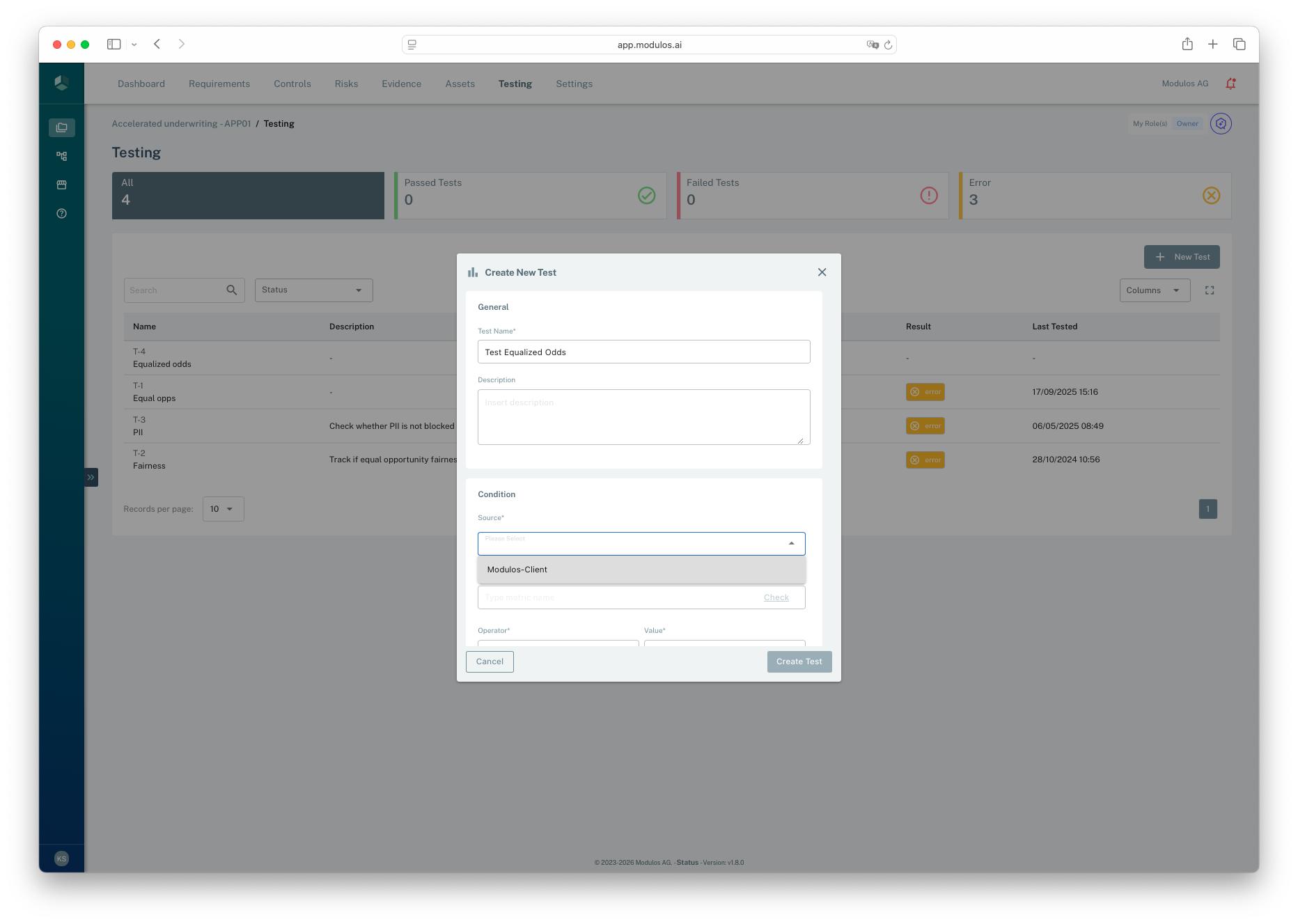

Tests define a condition that must hold for a metric. Schedules define when Modulos evaluates that condition so you can continuously verify control outcomes.

What this is

In Modulos, a test is intentionally simple:

- one source

- one metric

- one operator

- one expected value

- optional schedule
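To make the five parts concrete, here is a minimal sketch of a test as a plain data structure. The class and field names (`TestDefinition`, `Schedule`, `expected`) and the example source and metric names are hypothetical illustrations, not the Modulos data model or API.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Schedule:
    frequency: str    # "daily", "weekly", or "monthly"
    time_of_day: str  # e.g. "09:00", shown in the viewer's local timezone


@dataclass(frozen=True)
class TestDefinition:
    # Hypothetical shape: one source, one metric, one operator, one expected value.
    name: str
    source: str                          # e.g. a configured Prometheus or Datadog source
    metric: str                          # metric name as known to that source
    operator: str                        # "eq", "ne", "gt", "gte", "lt", "lte"
    expected: Union[str, int, float]     # value the metric is compared against
    schedule: Optional[Schedule] = None  # optional; tests can also be run manually


latency_test = TestDefinition(
    name="p95 latency under 250 ms",
    source="prometheus-prod",             # hypothetical source name
    metric="http_request_latency_p95_ms",  # hypothetical metric name
    operator="lt",
    expected=250,
    schedule=Schedule(frequency="daily", time_of_day="09:00"),
)
```

Because every field is a single value, a reviewer can read the whole claim ("p95 latency is less than 250 ms, checked daily") straight off the definition.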

This simplicity is a feature. It keeps tests legible for reviewers and auditors and makes “what does this prove” easy to answer.

Where in Modulos

- Project → Testing: view, create, and filter tests

- Project → Testing → select a test: run, edit, and review results

- Project → Settings → Sources: configure the sources your tests depend on

Creating a test walks through four steps:

1. Test details: name and describe what the test is verifying.

2. Select source: choose which source the metric comes from.

3. Metric and validation: enter a metric name and validate it against the source.

4. Create Test: save the test. You can add a schedule after creation.

Who can do what

Permissions

- Owners and Editors can create, edit, enable, and run tests.

- Reviewers and Auditors can view tests and results, but do not execute or modify them.

How test conditions work

Operators and metric types

The available operators depend on the metric type:

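| Metric type | Supported operators | Example |
|---|---|---|
| String | eq, ne | “status equals ok” |
| Integer / Float | gt, gte, eq, lt, lte, ne | “error rate less than 0.01” |

The operator names are the usual abbreviations: eq (equal), ne (not equal), gt / gte (greater than / greater than or equal), lt / lte (less than / less than or equal).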
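The table amounts to a per-type allow-list of comparisons. The sketch below encodes it with Python's `operator` module; deriving the metric type from the expected value, and the names `OPERATORS_BY_TYPE`, `metric_type`, and `check`, are assumptions for illustration, not how Modulos implements validation.

```python
import operator
from typing import Callable, Dict

# Allowed operators per metric type, following the table.
OPERATORS_BY_TYPE = {
    "string": {"eq", "ne"},
    "number": {"gt", "gte", "eq", "lt", "lte", "ne"},  # Integer / Float
}

COMPARATORS: Dict[str, Callable] = {
    "eq": operator.eq, "ne": operator.ne,
    "gt": operator.gt, "gte": operator.ge,
    "lt": operator.lt, "lte": operator.le,
}


def metric_type(value) -> str:
    # bool is a subclass of int in Python; this sketch does not treat it as numeric.
    if isinstance(value, bool):
        raise TypeError("boolean metrics are not covered by this sketch")
    if isinstance(value, str):
        return "string"
    if isinstance(value, (int, float)):
        return "number"
    raise TypeError(f"unsupported metric type: {type(value).__name__}")


def check(value, op: str, expected) -> bool:
    """Reject operators the metric type does not support, then compare."""
    kind = metric_type(expected)  # assumption: type is taken from the expected value
    if op not in OPERATORS_BY_TYPE[kind]:
        raise ValueError(f"operator {op!r} is not supported for {kind} metrics")
    return COMPARATORS[op](value, expected)
```

For example, `check("ok", "eq", "ok")` and `check(0.004, "lt", 0.01)` both return `True`, while `check("ok", "gt", "ok")` raises `ValueError` because ordering comparisons are not offered for strings.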

Evaluation behavior

When a test runs, Modulos retrieves the latest available value for the metric within a lookback window and evaluates the condition.

This makes tests robust to timing jitter and aligns with how observability platforms behave: you almost always care about “the most recent signal”, not “a datapoint at an exact second”.
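The evaluation rule can be sketched as "filter to the lookback window, take the newest datapoint, compare." The window length is not specified here, so it is a parameter; treating an empty window as `Error` rather than `Failed` is an assumption, chosen because the condition could not be evaluated at all. Function and parameter names are hypothetical.

```python
import operator
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

COMPARATORS = {"eq": operator.eq, "ne": operator.ne, "gt": operator.gt,
               "gte": operator.ge, "lt": operator.lt, "lte": operator.le}


def evaluate(points: List[Tuple[datetime, float]], op: str, expected: float,
             now: datetime, lookback: timedelta) -> str:
    """Evaluate one run and return "Passed", "Failed", or "Error"."""
    window_start = now - lookback
    in_window = [(ts, v) for ts, v in points if window_start <= ts <= now]
    if not in_window or op not in COMPARATORS:
        # Assumption: no datapoint in the window (or an unknown operator) is an Error,
        # not a Failed result, because the condition could not be evaluated.
        return "Error"
    _, latest = max(in_window, key=lambda point: point[0])  # most recent datapoint wins
    return "Passed" if COMPARATORS[op](latest, expected) else "Failed"


now = datetime(2024, 6, 3, 9, 0, tzinfo=timezone.utc)
points = [(now - timedelta(hours=30), 0.02), (now - timedelta(hours=2), 0.004)]
print(evaluate(points, "lt", 0.01, now, timedelta(hours=24)))  # Passed
```

The older 0.02 datapoint falls outside the 24-hour window, so only the 0.004 reading counts. With a 1-hour window no datapoint qualifies and the run returns `Error`.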

Figure: a scheduled run (e.g., daily) triggers an evaluation that (1) fetches the latest datapoint, (2) checks the condition (for example, metric < threshold), and (3) emits a result of Passed, Failed, or Error.

Tests evaluate the most recent signal available in the window.

Schedules

Tests can run on a schedule or be executed manually.

Schedules include:

- a frequency: daily, weekly, or monthly

- a time of day

Schedule times are shown in your local timezone.

Schedule semantics

- Weekly runs on Sundays.

- Monthly runs on the first day of the month.
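These rules determine the next run time unambiguously. The sketch below computes it, assuming naive datetimes interpreted as the user's local time and ignoring daylight-saving transitions; `next_run` is a hypothetical helper, not part of Modulos.

```python
from datetime import date, datetime, time, timedelta

SUNDAY = 6  # datetime.weekday(): Monday == 0 ... Sunday == 6


def next_run(after: datetime, frequency: str, at: time) -> datetime:
    """Return the first scheduled run strictly after `after`.

    Works on naive datetimes read as local time; DST transitions are ignored.
    """
    day = after.date()
    if frequency == "daily":
        candidate = datetime.combine(day, at)
        if candidate <= after:
            candidate += timedelta(days=1)
    elif frequency == "weekly":
        # Move forward to the coming Sunday (today, if today is Sunday).
        candidate = datetime.combine(day + timedelta(days=(SUNDAY - day.weekday()) % 7), at)
        if candidate <= after:
            candidate += timedelta(days=7)
    elif frequency == "monthly":
        candidate = datetime.combine(day.replace(day=1), at)
        if candidate <= after:
            # Roll over to the first day of the next month.
            year, month = (day.year + 1, 1) if day.month == 12 else (day.year, day.month + 1)
            candidate = datetime.combine(date(year, month, 1), at)
    else:
        raise ValueError(f"unknown frequency: {frequency!r}")
    return candidate
```

For a schedule at 09:00 checked on Wednesday 5 June 2024 at 10:00, the daily run is 6 June, the weekly run is Sunday 9 June, and the monthly run is 1 July.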

Ownership and linking

Each test has:

- an assignee, who is responsible for investigating failures and errors

- optional links to controls, so results are traceable to governance statements

Linking is most effective when the control is already implemented. In the current UI, tests are associated with controls that are in the Executed state.
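A minimal sketch of the ownership and linking rules, reading the current UI behavior as "only Executed controls can be linked." The classes, the `link` method, and any status name other than `Executed` (such as `"Not started"`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Control:
    name: str
    status: str  # "Executed" comes from the docs; other status names are hypothetical


@dataclass
class LinkedTest:
    name: str
    assignee: Optional[str] = None  # person who investigates failures and errors
    controls: List[Control] = field(default_factory=list)

    def link(self, control: Control) -> None:
        # Assumption: mirrors the current UI, where tests link to Executed controls only.
        if control.status != "Executed":
            raise ValueError(f"control {control.name!r} is {control.status!r}, not Executed")
        self.controls.append(control)


test = LinkedTest(name="p95 latency under 250 ms", assignee="alice")
test.link(Control(name="Monitor service latency", status="Executed"))
```

Keeping the assignee on the test itself means every Failed or Error result has a named owner, and each linked control gives auditors a direct path from the result to the governance statement it supports.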

How to use it

1. Choose a control outcome: pick a signal that reflects whether the control is working.

2. Select a source: use Prometheus or Datadog for pull metrics, or Modulos Client for pushed metrics.

3. Define the condition: pick the operator and expected value based on the metric type.

4. Add a schedule: run on a cadence that matches how quickly drift matters.

5. Assign and link: assign an owner and link the test to the relevant control.

Important considerations

- Use tests to verify outcomes, not to mirror every implementation detail. Fewer high-signal tests beat many noisy tests.

- If a test frequently returns Error, treat it as an integration or observability problem, not a control failure.

- Be explicit about units and direction: “p95 latency less than 250 ms” is clearer than “latency ok”.

- Higher-frequency schedules can create alert fatigue. Start with daily or weekly unless you truly need faster feedback.